The key to developing flexible machine-learning models that can reason the way people do may not be feeding them heaps of training data. Instead, a new study suggests, it may come down to how they are trained. These findings could be a big step toward better, less error-prone artificial intelligence models and could help illuminate the secrets of how AI systems, and humans, learn.

Humans are master remixers. When people understand the relationships among a set of components, such as food ingredients, we can combine them into all sorts of delicious recipes. With language, we can decipher sentences we’ve never encountered before and compose complex, original responses because we grasp the underlying meanings of words and the rules of grammar. In technical terms, these two examples are evidence of “compositionality,” or “systematic generalization,” often viewed as a key principle of human cognition. “I think that is the most important definition of intelligence,” says Paul Smolensky, a cognitive scientist at Johns Hopkins University. “You can go from knowing about the parts to dealing with the whole.”

True compositionality may be central to the human mind, but machine-learning developers have struggled for decades to prove that AI systems can achieve it. A 35-year-old argument made by the late philosophers and cognitive scientists Jerry Fodor and Zenon Pylyshyn posits that the principle may be out of reach for standard neural networks. Today’s generative AI models can mimic compositionality, producing humanlike responses to written prompts. Yet even the most advanced models, including OpenAI’s GPT-3 and GPT-4, still fall short of some benchmarks of this ability. For instance, if you ask ChatGPT a question, it might initially give the correct answer. If you keep sending it follow-up queries, however, it might fail to stay on topic or begin contradicting itself. This suggests that although the models can regurgitate information from their training data, they don’t truly grasp the meaning and intention behind the sentences they produce.

But a novel training protocol that focuses on shaping how neural networks learn can boost an AI model’s ability to interpret information the way humans do, according to a study published on Wednesday in Nature. The findings suggest that a certain approach to AI education might create compositional machine-learning models that can generalize just as well as people, at least in some instances.

“This research breaks important ground,” says Smolensky, who was not involved in the study. “It accomplishes something that we have wanted to accomplish and have not previously succeeded in.”

To train a system that seems capable of recombining components and understanding the meaning of novel, complex expressions, researchers did not have to build an AI from scratch. “We didn’t need to fundamentally change the architecture,” says Brenden Lake, lead author of the study and a computational cognitive scientist at New York University. “We just had to give it practice.” The researchers started with a standard transformer model, the same sort of AI scaffolding that supports ChatGPT and Google’s Bard, but one that lacked any prior text training. They ran that basic neural network through a specially designed set of tasks meant to teach the program how to interpret a made-up language.

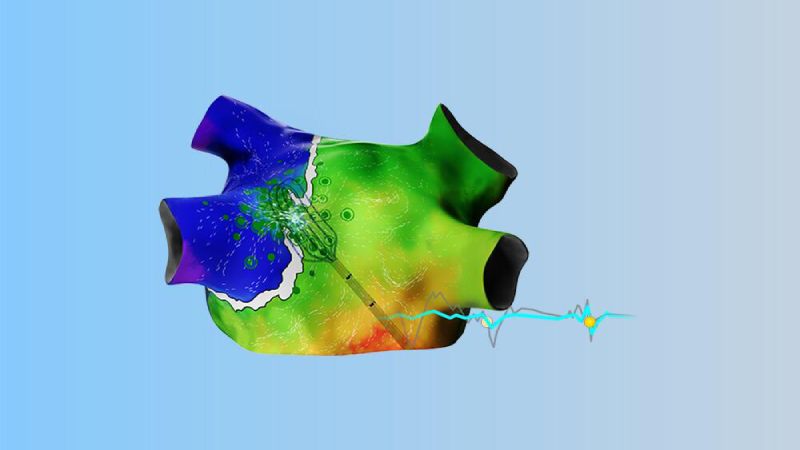

The language consisted of nonsense words (such as “dax,” “lug,” “kiki,” “fep” and “blicket”) that “translated” into sets of colorful dots. Some of these invented words were symbolic terms that directly represented dots of a certain color, while others signified functions that changed the order or number of dot outputs. For instance, dax represented a simple red dot, but fep was a function that, when paired with dax or any other symbolic word, multiplied its corresponding dot output by three. So “dax fep” would translate into three red dots. The AI training included none of that information, however: the researchers just fed the model a handful of examples of nonsense sentences paired with the corresponding sets of dots.
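To make the dot language concrete, here is a minimal Python sketch of a hand-written interpreter for it. It is only an illustration of the target mapping, not the study’s method: the model in the study was never given rules like these and had to infer them from examples. Only the meanings of `dax` (a red dot) and `fep` (triple the output) come from the description above. The second color word `wif`, the names `PRIMITIVES` and `interpret`, and the assumption that `fep` applies to the word immediately before it are all invented for this example.

```python
# Symbolic words map directly to a dot color. Only "dax" -> red is taken
# from the article; "wif" -> green is a hypothetical entry for illustration.
PRIMITIVES = {"dax": "RED", "wif": "GREEN"}

# "fep" is the one function word the article describes: it multiplies the
# dot output of the word it is paired with by three.
REPEAT_WORD = "fep"
REPEAT_COUNT = 3


def interpret(phrase: str) -> list[str]:
    """Translate a phrase of nonsense words into a list of dot colors.

    Assumption: "fep" acts on the symbolic word immediately before it.
    """
    dots: list[str] = []
    last: list[str] = []  # output of the most recent symbolic word
    for word in phrase.split():
        if word in PRIMITIVES:
            last = [PRIMITIVES[word]]
            dots.extend(last)
        elif word == REPEAT_WORD:
            if not last:
                raise ValueError("'fep' needs a symbolic word before it")
            # One copy is already in dots; append the remaining copies.
            dots.extend(last * (REPEAT_COUNT - 1))
            last = []
        else:
            raise ValueError(f"unknown word: {word!r}")
    return dots


print(interpret("dax fep"))  # three red dots
```

Under these assumptions, `interpret("dax fep")` yields three red dots, matching the example in the text, and a longer phrase such as `"wif dax fep"` yields one green dot followed by three red ones.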

From there, the study authors prompted the model to produce its own series of dots in response to new phrases, and they graded the AI on whether it had correctly followed the language’s implied rules. Soon the neural network was able to respond coherently, following the logic of the nonsense language, even when introduced to new configurations of words. This suggests it could “understand” the made-up rules of the language and apply them to phrases it hadn’t been trained on.

Additionally, the researchers tested their trained AI model’s understanding of the made-up language against 25 human participants. They found that, at its best, their optimized neural network responded 100% accurately, while human answers were correct about 81% of the time. (When the team fed GPT-4 the training prompts for the language and then asked it the test questions, the large language model was only 58% accurate.) Given additional training, the researchers’ standard transformer model started to mimic human reasoning so well that it made the same mistakes: for instance, human participants often erred by assuming there was a one-to-one relationship between specific words and dots, even though many of the phrases didn’t follow that pattern. When the model was fed examples of this behavior, it quickly began to replicate it and made the error with the same frequency as humans did.

The model’s performance is particularly remarkable, given its small size. “This is not a large language model trained on the whole Internet; this is a relatively small transformer trained for these tasks,” says Armando Solar-Lezama, a computer scientist at the Massachusetts Institute of Technology, who was not involved in the new study. “It was interesting to see that nevertheless it’s able to exhibit these kinds of generalizations.” The finding implies that instead of just shoving ever more training data into machine-learning models, a complementary strategy might be to offer AI algorithms the equivalent of a focused linguistics or algebra class.

Solar-Lezama says this training method could theoretically provide an alternate path to better AI. “Once you’ve fed a model the whole Internet, there’s no second Internet to feed it to further improve. So I think strategies that force models to reason better, even in synthetic tasks, could have an impact going forward,” he says, with the caveat that there could be challenges to scaling up the new training protocol. At the same time, Solar-Lezama believes such studies of smaller models help us better understand the “black box” of neural networks and could shed light on the so-called emergent abilities of larger AI systems.

Smolensky adds that this study, along with similar work in the future, could also boost humans’ understanding of our own mind. That could help us design systems that minimize our species’ error-prone tendencies.

For now, however, these benefits remain hypothetical, and there are a couple of big limitations. “Despite its successes, their algorithm doesn’t solve every challenge raised,” says Ruslan Salakhutdinov, a computer scientist at Carnegie Mellon University, who was not involved in the study. “It doesn’t automatically handle unpracticed forms of generalization.” In other words, the training protocol helped the model excel at one type of task: learning the patterns in a fake language. But given a whole new task, it couldn’t apply the same skill. This was evident in benchmark tests, where the model failed to manage longer sequences and couldn’t grasp previously unintroduced “words.”

And crucially, every expert Scientific American spoke with noted that a neural network capable of limited generalization is very different from the holy grail of artificial general intelligence, wherein computer models surpass human capacity in most tasks. You could argue that “it’s a very, very, very small step in that direction,” Solar-Lezama says. “But we’re not talking about an AI acquiring capabilities by itself.”

Between limited interactions with AI chatbots, which can present an illusion of hypercompetency, and abundant circulating hype, many people may have inflated ideas of neural networks’ powers. “Some people might find it surprising that these kinds of linguistic generalization tasks are really hard for systems like GPT-4 to do out of the box,” Solar-Lezama says. The new study’s findings, though exciting, could inadvertently serve as a reality check. “It’s really important to keep track of what these systems are capable of doing,” he says, “but also of what they can’t.”
