Crews at the Department of Energy’s SLAC National Accelerator Laboratory have taken the first 3,200-megapixel digital photos, the largest ever taken in a single shot, with an extraordinary array of imaging sensors that will become the heart of the future camera of Vera C. Rubin Observatory.

The images are so large that it would take 378 4K ultra-high-definition TV screens to display one of them in full size, and their resolution is so high that you could see a golf ball from about 15 miles away. These and other properties will soon drive unprecedented astrophysical research.
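The two comparisons above can be checked with back-of-the-envelope arithmetic. The sketch below is only illustrative: the 4K panel size (3840 × 2160) and the golf ball diameter (1.68 inches, about 42.67 mm) are assumptions that the article does not state, and the variable names are made up for this example.

```python
import math

# Figures quoted in the article.
IMAGE_MEGAPIXELS = 3_200
GOLF_BALL_DISTANCE_MILES = 15

# Assumptions not stated in the article.
UHD_4K_PIXELS = 3840 * 2160        # consumer 4K UHD panel, about 8.3 megapixels
GOLF_BALL_DIAMETER_M = 0.04267     # regulation minimum diameter, 1.68 inches
METERS_PER_MILE = 1609.344
ARCSEC_PER_RADIAN = math.degrees(1) * 3600

# Number of 4K screens needed to show every pixel of one image.
screens_needed = IMAGE_MEGAPIXELS * 1_000_000 / UHD_4K_PIXELS

# Small-angle approximation: angle = size / distance.
distance_m = GOLF_BALL_DISTANCE_MILES * METERS_PER_MILE
golf_ball_arcsec = GOLF_BALL_DIAMETER_M / distance_m * ARCSEC_PER_RADIAN

print(f"4K screens per image: {screens_needed:.0f}")
print(f"Golf ball at 15 miles: {golf_ball_arcsec:.2f} arcseconds")
```

With the rounded 3,200-megapixel figure the screen count comes out a little above the quoted 378, which suggests the article's number was based on a slightly smaller exact pixel count. The golf ball works out to an angle of a few tenths of an arcsecond.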

Next, the sensor array will be integrated into the world’s largest digital camera, currently under construction at SLAC. Once installed at Rubin Observatory in Chile, the camera will produce panoramic images of the complete Southern sky: one panorama every few nights for 10 years. Its data will feed into the Rubin Observatory Legacy Survey of Space and Time (LSST), a catalog of more galaxies than there are living people on Earth and of the motions of countless astrophysical objects. Using the LSST Camera, the observatory will create the largest astronomical movie of all time and shed light on some of the biggest mysteries of the universe, including dark matter and dark energy.

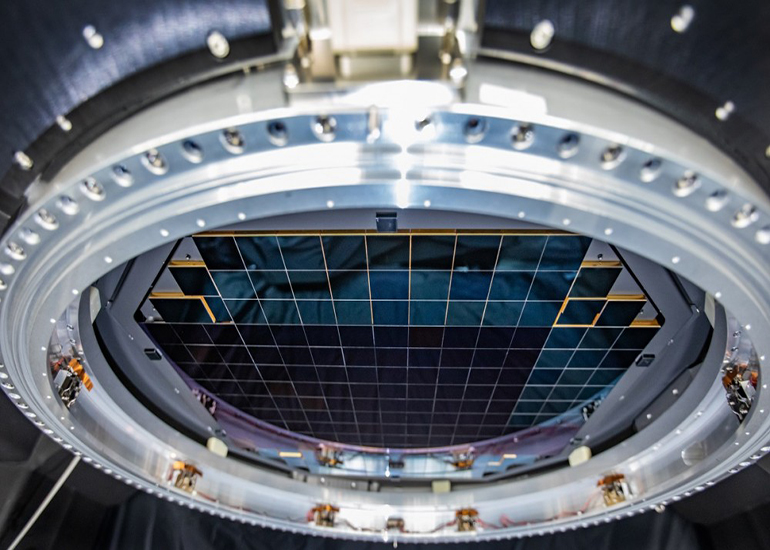

The first images taken with the sensors were a test for the camera’s focal plane, whose assembly was completed at SLAC in January.

“This is a huge milestone for us,” said Vincent Riot, LSST Camera project manager from DOE’s Lawrence Livermore National Laboratory. “The focal plane will produce the images for the LSST, so it’s the capable and sensitive eye of the Rubin Observatory.”

SLAC’s Steven Kahn, director of the observatory, said, “This achievement is among the most significant of the entire Rubin Observatory Project. The completion of the LSST Camera focal plane and its successful tests is a huge victory by the camera team that will enable Rubin Observatory to deliver next-generation astronomical science.”

A technological marvel for the best science

In a way, the focal plane is similar to the imaging sensor of a digital consumer camera or the camera in a cell phone: It captures light emitted from or reflected by an object and converts it into electrical signals that are used to produce a digital image. But the LSST Camera focal plane is much more sophisticated. In fact, it contains 189 individual sensors, or charge-coupled devices (CCDs), that each bring 16 megapixels to the table, about the same number as the imaging sensors of most modern digital cameras.

Sets of nine CCDs and their supporting electronics were assembled into square units, called “science rafts,” at DOE’s Brookhaven National Laboratory and shipped to SLAC. There, the camera team inserted 21 of them, plus an additional four specialty rafts not used for imaging, into a grid that holds them in place.
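The sensor counts quoted above fit together as simple multiplication. The following sketch uses only numbers from the article (21 science rafts, nine CCDs each, 16 megapixels per CCD, 3,200 megapixels in total); the variable names are illustrative, and the 16-megapixel figure is treated as a rounded value.

```python
# Figures quoted in the article.
SCIENCE_RAFTS = 21
CCDS_PER_RAFT = 9
MEGAPIXELS_PER_CCD = 16      # rounded figure from the article
QUOTED_TOTAL_MEGAPIXELS = 3_200

# 21 rafts of nine CCDs each give the 189 imaging sensors.
total_ccds = SCIENCE_RAFTS * CCDS_PER_RAFT

# Total pixel count if every CCD had exactly 16 megapixels.
nominal_megapixels = total_ccds * MEGAPIXELS_PER_CCD

# Per-CCD pixel count implied by the quoted 3,200-megapixel total.
implied_megapixels_per_ccd = QUOTED_TOTAL_MEGAPIXELS / total_ccds

print(f"Imaging CCDs: {total_ccds}")
print(f"Nominal total: {nominal_megapixels} megapixels")
print(f"Implied per CCD: {implied_megapixels_per_ccd:.1f} megapixels")
```

The nominal product falls somewhat short of 3,200 megapixels, so the per-sensor and total figures in the article are best read as rounded values rather than exact counts.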

The focal plane has some truly extraordinary properties. Not only does it contain a whopping 3.2 billion pixels, but its pixels are also very small, about 10 microns wide, and the focal plane itself is extremely flat, varying by no more than a tenth of the width of a human hair. This allows the camera to produce sharp images in very high resolution. At more than 2 feet wide, the focal plane is enormous compared to the 1.4-inch-wide imaging sensor of a full-frame consumer camera and large enough to capture a portion of the sky about the size of 40 full moons. Finally, the whole telescope is designed in such a way that the imaging sensors will be able to spot objects 100 million times dimmer than those visible to the naked eye, a sensitivity that would let you see a candle from thousands of miles away.
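Astronomers usually express brightness ratios like “100 million times dimmer” in magnitudes. As a rough illustration, the standard Pogson relation converts a flux ratio R into 2.5 × log10(R) magnitudes; the article itself does not quote a magnitude, so the result below is a derived figure, not one from the source.

```python
import math

FLUX_RATIO = 100_000_000  # "100 million times dimmer", from the article

# Pogson relation: a flux ratio R corresponds to 2.5 * log10(R) magnitudes.
magnitude_difference = 2.5 * math.log10(FLUX_RATIO)

print(f"{magnitude_difference:.1f} magnitudes fainter")
```

In other words, the quoted sensitivity corresponds to objects about 20 magnitudes fainter than the faintest ones visible without a telescope.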

“These specifications are just astounding,” said Steven Ritz, project scientist for the LSST Camera at the University of California, Santa Cruz. “These unique features will enable the Rubin Observatory’s ambitious science program.”

Over 10 years, the camera will collect images of about 20 billion galaxies. “These data will improve our knowledge of how galaxies have evolved over time and will let us test our models of dark matter and dark energy more deeply and precisely than ever,” Ritz said. “The observatory will be a wonderful facility for a broad range of science, from detailed studies of our solar system to studies of faraway objects toward the edge of the visible universe.”

A high-stakes assembly process

The completion of the focal plane earlier this year concluded six nerve-wracking months for the SLAC crew that inserted the 25 rafts into their narrow slots in the grid. To maximize the imaging area, the gaps between sensors on neighboring rafts are less than five human hairs wide. Since the imaging sensors easily crack if they touch each other, this made the whole operation very tricky.

The rafts are also costly: up to $3 million apiece.

SLAC mechanical engineer Hannah Pollek, who worked at the front line of sensor integration, said, “The combination of high stakes and tight tolerances made this project very challenging. But with a versatile team we pretty much nailed it.”

The team members spent a year preparing for the raft installation by installing numerous “practice” rafts that did not go into the final focal plane. That allowed them to perfect the procedure of pulling each of the 2-foot-tall, 20-pound rafts into the grid using a specialized gantry developed by SLAC’s Travis Lange, lead mechanical engineer on the raft installation.

Tim Bond, head of the LSST Camera Integration and Test team at SLAC, said, “The sheer size of the individual camera components is impressive, and so are the sizes of the teams working on them. It took a well-choreographed team to complete the focal plane assembly, and absolutely everyone working on it rose to the challenge.”

Taking the first 3,200-megapixel images

The focal plane has been placed inside a cryostat, where the sensors are cooled down to negative 150 degrees Fahrenheit, their required operating temperature. After several months without lab access due to the coronavirus pandemic, the camera team resumed its work in May with limited capacity and following strict social distancing requirements. Extensive tests are now underway to make sure the focal plane meets the technical requirements needed to support Rubin Observatory’s science program.

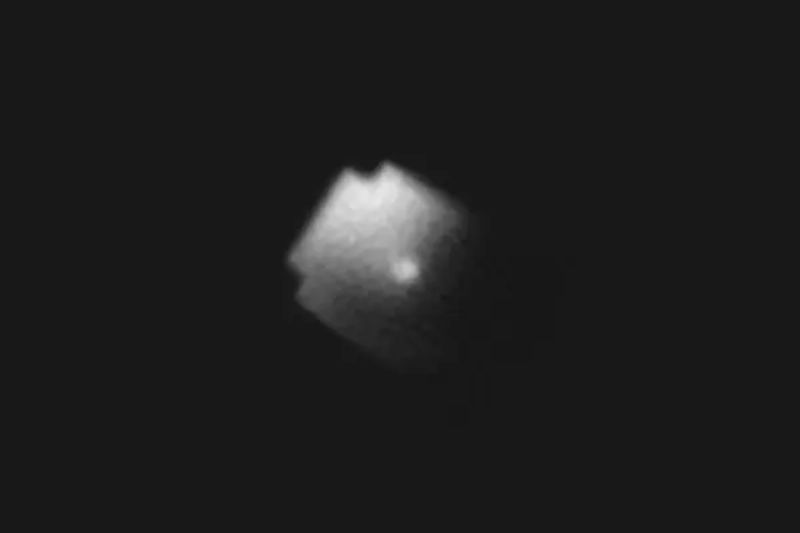

Taking the first 3,200-megapixel images of a variety of objects, including a head of Romanesco that was chosen for its very detailed surface structure, was one of these tests. To do so without a fully assembled camera, the SLAC team used a 150-micron pinhole to project images onto the focal plane. These photos, which can be explored in full resolution online (links at the bottom of the release), show the extraordinary detail captured by the imaging sensors.

“Taking these images is a major accomplishment,” said SLAC’s Aaron Roodman, the scientist responsible for the assembly and testing of the LSST Camera. “With the tight specifications we really pushed the limits of what’s possible to take advantage of every square millimeter of the focal plane and maximize the science we can do with it.”

Camera team on the home stretch

More challenging work lies ahead as the team completes the camera assembly.

In the next few months, they will insert the cryostat with the focal plane into the camera body and add the camera’s lenses, including the world’s largest optical lens, a shutter and a filter exchange system for studies of the night sky in different colors. By mid-2021, the SUV-sized camera will be ready for final testing before it begins its journey to Chile.

“Nearing completion of the camera is very exciting, and we’re proud of playing such a central role in building this key component of Rubin Observatory,” said JoAnne Hewett, SLAC’s chief research officer and associate lab director for fundamental physics. “It’s a milestone that brings us a big step closer to exploring fundamental questions about the universe in ways we haven’t been able to before.”
